For this lab we were given the task of developing a navigational map for the Priory, a plot of land owned by the university on the outskirts of Eau Claire that contains a building, forest, and elevation changes. The purpose of this lab was to create a map with enough information to successfully find a number of navigation points in the Priory area, much like an orienteering course, except that instead of being given a map, we had to make it ourselves.

The assignment included creating two maps, one using the Geographic Coordinate System (GCS) and the other using the Universal Transverse Mercator (UTM) coordinate system, to help determine which is better suited for navigation. These are two of the most widely used global coordinate systems. UTM is based on the Transverse Mercator projection, which is conformal: it preserves the shape of small areas. Its scale factor is 0.9996 along each zone's central meridian, so distances measured within a zone are distorted by only a small fraction of a percent (less than 1 meter per kilometer at mid-latitudes such as Eau Claire's), which is why UTM is often used for navigation. The system divides the globe into 60 north-south zones, each 6 degrees of longitude wide.

(Figure 1) UTM zones

(Figure 2) US UTM zones. Eau Claire, WI, falls in zone 15N, so that was the zone chosen for this lab.
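The zone assignment can be checked with a short calculation. This Python sketch assumes the standard rule that zones are 6 degrees wide and numbered eastward from 180° W (it ignores the irregular zones around Norway and Svalbard). The coordinates used for Eau Claire (about 44.81° N, 91.50° W) are approximate and are an assumption, not data from the lab; the function names are hypothetical.

```python
import math

def utm_zone(lon_deg: float) -> int:
    """Return the standard UTM zone number (1-60) for a longitude in degrees.

    Ignores the special-case zones around Norway and Svalbard.
    """
    # Zones are 6 degrees wide, numbered eastward starting at 180 degrees W.
    return int(math.floor((lon_deg + 180.0) / 6.0)) % 60 + 1

def central_meridian(zone: int) -> float:
    """Longitude (degrees) of the zone's central meridian."""
    return -183.0 + 6.0 * zone

def hemisphere(lat_deg: float) -> str:
    """UTM uses N for the northern hemisphere and S for the southern."""
    return "N" if lat_deg >= 0 else "S"

# Approximate location of Eau Claire, WI (assumed for illustration).
lat, lon = 44.81, -91.50
zone = utm_zone(lon)
print(f"{zone}{hemisphere(lat)}")    # 15N
print(central_meridian(zone))         # -93.0
print(lon - central_meridian(zone))   # 1.5 (degrees east of the central meridian)
```

Because each zone is 6 degrees wide, a location 1.5 degrees east of the central meridian sits halfway between the middle of the zone and its eastern boundary at 90° W.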

GCS uses latitude and longitude to determine location. Coordinates can be written either in decimal degrees or in degrees, minutes, and seconds (DMS); in both forms they are angular units rather than units of distance, unlike UTM's meters.
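Converting between the two GCS notations is simple arithmetic, since one degree is 60 minutes and one minute is 60 seconds. A minimal Python sketch (the function names are hypothetical, written for illustration):

```python
def dms_to_dd(degrees: int, minutes: int, seconds: float, hemisphere: str) -> float:
    """Convert degrees/minutes/seconds to signed decimal degrees."""
    dd = abs(degrees) + minutes / 60.0 + seconds / 3600.0
    # South latitudes and west longitudes are negative by convention.
    return -dd if hemisphere.upper() in ("S", "W") else dd

def dd_to_dms(dd: float) -> tuple[int, int, float]:
    """Convert decimal degrees to (degrees, minutes, seconds), dropping the sign.

    Seconds may carry a tiny floating-point error for values like 44.8.
    """
    dd = abs(dd)
    degrees = int(dd)
    minutes_full = (dd - degrees) * 60.0
    minutes = int(minutes_full)
    seconds = (minutes_full - minutes) * 60.0
    return degrees, minutes, seconds

print(dms_to_dd(91, 30, 0, "W"))  # -91.5
print(dd_to_dms(44.8125))         # (44, 48, 45.0)
```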

In order to use our map in the field without measuring tapes or lasers, we each determined a pace count: the number of steps it takes us to walk 100 meters. We first measured 100 meters from one end of the field to the other with a laser rangefinder. We then walked the 100 meters, counting each time our left foot hit the pavement, and repeated the walk once more to check for consistency. My personal pace count for 100 meters is 72 steps.
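With a pace count in hand, converting between map distance and counted steps is a simple proportion. This Python sketch plugs in the 72-steps-per-100-meters figure above; the helper names are hypothetical.

```python
PACES_PER_100_M = 72  # my measured pace count (left-foot steps per 100 m)

def paces_for(distance_m: float, paces_per_100m: float = PACES_PER_100_M) -> float:
    """Number of paces needed to cover a map distance in meters."""
    return distance_m * paces_per_100m / 100.0

def distance_for(paces: float, paces_per_100m: float = PACES_PER_100_M) -> float:
    """Ground distance in meters covered by a given number of paces."""
    return paces * 100.0 / paces_per_100m

print(paces_for(250))               # 180.0
print(round(distance_for(1), 2))    # 1.39 (meters per counted pace)
```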

Methods

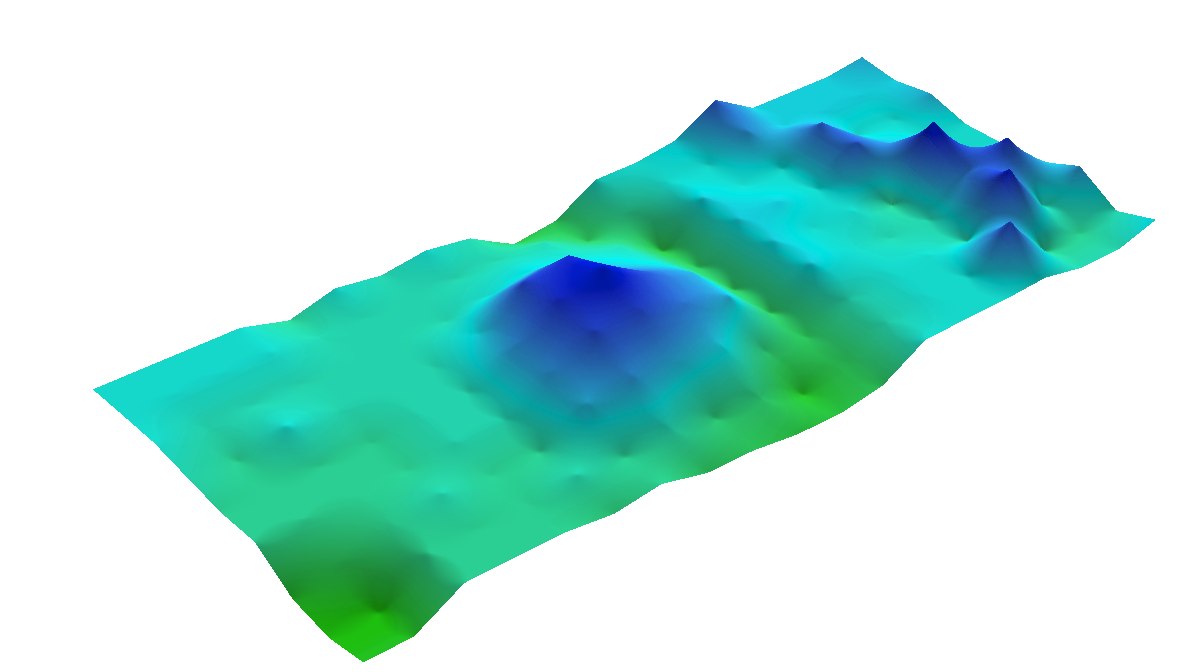

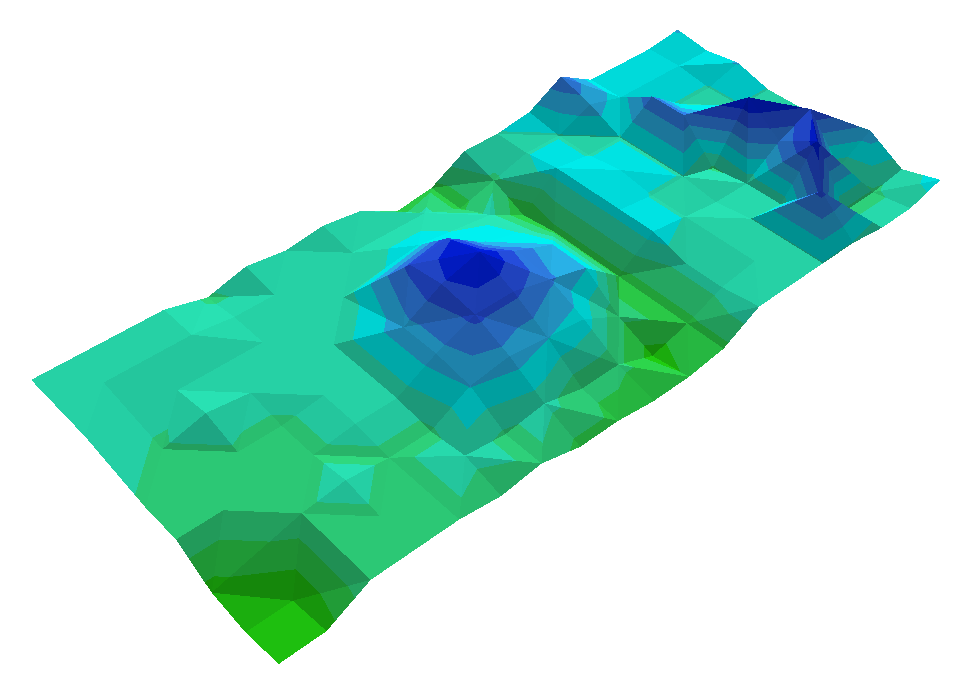

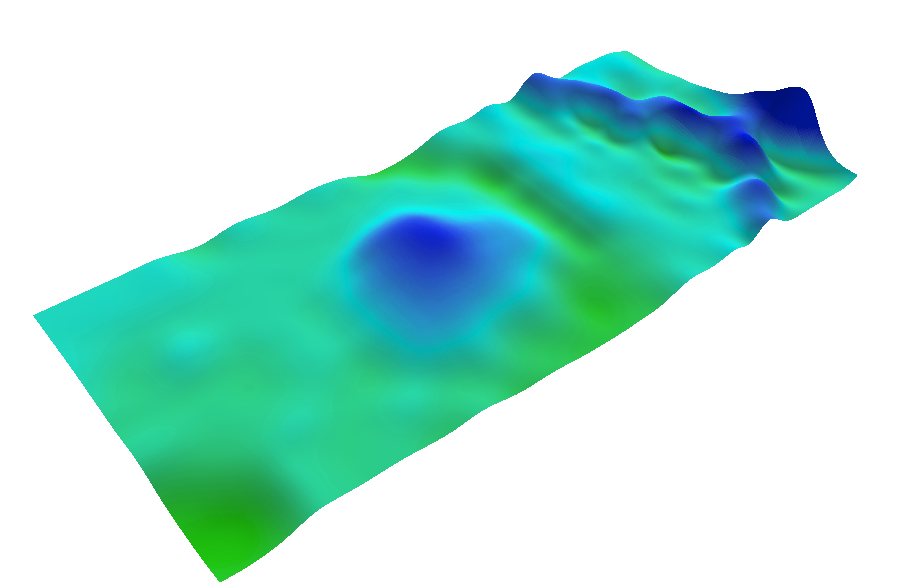

Within ArcMap, I opened the Priory Geodatabase provided by Professor Hupy. It contained a number of layers, including imagery, 5 meter contour lines, 2 foot contour lines, a DEM (digital elevation model), and the navigation boundary. The DEM represents elevation as continuous data, which is easier to read at a glance than the discrete lines used for contours. From there I spent a significant amount of time experimenting with colors and layers to display the maps appropriately. My objectives were to minimize clutter while still providing maps that would help me identify geographic locations in the field. Below are some of the individual layers and how each one displays.

On both maps I included the imagery to give a sense of relative location (e.g., east of the Priory building and halfway down the east-facing slope). I also chose the 5 meter contour lines over the 2 foot ones, because the 2 foot contours were so closely spaced that they obscured the imagery, the DEM, and other features I wanted to highlight (see Figure 6).
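The difference in clutter follows directly from the contour intervals: 2 feet is about 0.61 meters, so the 2 foot layer draws roughly eight lines for every 5 meter line. The sketch below makes the comparison over a hypothetical 30 meters of relief (not a measured value for the Priory) and assumes the international foot of exactly 0.3048 m.

```python
FEET_TO_METERS = 0.3048  # international foot, exact by definition

def contour_count(relief_m: float, interval_m: float) -> int:
    """Approximate number of contour lines drawn across a slope spanning this relief."""
    return int(relief_m // interval_m)

interval_2ft = 2 * FEET_TO_METERS  # about 0.61 m
interval_5m = 5.0
relief = 30.0  # hypothetical elevation range in meters

print(contour_count(relief, interval_5m))    # 6
print(contour_count(relief, interval_2ft))   # 49
print(round(interval_5m / interval_2ft, 1))  # 8.2 (2 ft lines per 5 m line)
```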

Using a scale bar in meters is vital for applying the pace count established at the beginning of this lab. I chose small scale bar increments so that I could measure distances more precisely during the actual navigation. In addition, I added a grid to both maps to improve the likelihood of accurate navigation. Together, the DEM and contour lines seemed to be an effective way to show elevation in both a continuous (DEM) and a discrete (contour lines) form.
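Reading two grid coordinates off the UTM map gives a straight-line distance and a grid bearing, and the distance converts directly into paces. A minimal Python sketch, assuming hypothetical easting/northing values in meters; the bearing is measured from grid north, which can differ slightly from true and magnetic north.

```python
import math

PACES_PER_100_M = 72  # pace count from the beginning of this lab

def grid_leg(e1: float, n1: float, e2: float, n2: float) -> tuple[float, float]:
    """Return (distance in m, bearing in degrees clockwise from grid north) between two UTM points."""
    de, dn = e2 - e1, n2 - n1
    distance = math.hypot(de, dn)
    # atan2(east, north) gives the angle measured clockwise from grid north.
    bearing = math.degrees(math.atan2(de, dn)) % 360.0
    return distance, bearing

# Hypothetical start and target points in UTM Zone 15N (easting, northing).
start = (618_000.0, 4_963_000.0)
target = (618_300.0, 4_963_400.0)

dist, bearing = grid_leg(*start, *target)
print(dist)                            # 500.0
print(round(bearing, 1))               # 36.9
print(dist * PACES_PER_100_M / 100.0)  # 360.0
```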

For the GCS map, I wanted to focus on elevation change. The more darkly shaded areas depict lower elevation. One idea I experimented with was inverting the gray scale to show higher elevation in black. However, that obscured more of the imagery than I wanted, even after I adjusted the transparency.
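The gray scale shading can be thought of as a stretch that maps each elevation to a gray value between black and white. This Python sketch uses a plain minimum-maximum linear stretch, which is only one of several stretch types ArcMap offers, and a hypothetical elevation range; setting `invert=True` reproduces the rejected idea of showing higher ground in black.

```python
def to_gray(elev_m: float, low_m: float, high_m: float, invert: bool = False) -> int:
    """Map an elevation to a 0-255 gray value (0 = black) with a min-max linear stretch."""
    t = (elev_m - low_m) / (high_m - low_m)
    t = min(max(t, 0.0), 1.0)  # clamp values outside the stretch range
    if invert:
        t = 1.0 - t  # higher elevation becomes darker
    return round(255 * t)

LOW, HIGH = 240.0, 280.0  # hypothetical minimum and maximum elevations (m)

print(to_gray(240.0, LOW, HIGH))               # 0   (lowest ground is black)
print(to_gray(250.0, LOW, HIGH))               # 64
print(to_gray(280.0, LOW, HIGH))               # 255 (highest ground is white)
print(to_gray(280.0, LOW, HIGH, invert=True))  # 0   (inverted: highest is black)
```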

For the UTM map I used NAD 1983 UTM Zone 15N, whose underlying projection is Transverse Mercator. This projection is widely used in mapping and is conformal, so it preserves angles locally, which keeps directions measured across a small area like the Priory reliable. For this map I wanted to place less emphasis on elevation change and more on the imagery in combination with the DEM. After the field portion of this lab, I will have a better understanding of what works and what does not.

On both maps, I left space outside the navigation boundary to give a greater sense of the surrounding area. If I get really lost and end up outside the boundary, I will at least have a little leeway for error and can hopefully use the map to correct myself (or else cry for help!).

Discussion

One of the concepts I found most interesting was watching the shape of the navigation boundary change between the two coordinate systems I used. In the GCS map the boundary appears more compressed and rectangular, whereas in the UTM map it is a perfect square. I was thoroughly surprised at how challenging it is to fit every component you want into a simple map. Overall, I think I will prefer the UTM map in the field, because it appears that I will be able to glean more from it without having to analyze it. Given that all of the data is the same and only the coordinate systems and projections differ, it will be interesting to see which one works better for navigation.
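One likely reason for the different shapes is that a degree of longitude shrinks toward the poles while a degree of latitude stays nearly constant. If the GCS map draws one degree of longitude the same width as one degree of latitude (an assumption about how the data frame was displayed), an area that is square on the ground appears stretched east-west by about 1/cos(latitude). The sketch uses a spherical Earth with a mean radius of 6,371 km and an approximate latitude for Eau Claire; both are assumptions.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean radius; spherical approximation

def meters_per_degree(lat_deg: float) -> tuple[float, float]:
    """Approximate ground length (m) of one degree of latitude and of longitude."""
    per_deg_lat = math.pi * EARTH_RADIUS_M / 180.0
    per_deg_lon = per_deg_lat * math.cos(math.radians(lat_deg))
    return per_deg_lat, per_deg_lon

lat_m, lon_m = meters_per_degree(44.81)  # approximate latitude of Eau Claire
print(round(lat_m), round(lon_m))
# East-west stretch of a ground square drawn in unprojected degrees:
print(round(lat_m / lon_m, 2))  # about 1.41
```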

Since I have not yet tested my map in the field, I am curious to find out how the two maps differ in accuracy. Looking at the UTM zones, it was evident that Eau Claire lies in the eastern half of zone 15N, toward the boundary with zone 16N. I'm curious how much (if at all) a location's position within the zone affects the accuracy of the map for navigation. Another possible issue is the projection I chose for the UTM map: there may be a better-suited projection that I am not aware of, and my choice could make navigating in the field more difficult. I would like to spend more time analyzing the pros and cons of different coordinate systems and projections, so that if I need to make more navigation or preservation maps in the future, I will have a better handle on which projections to use.
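How much position within a zone matters can be estimated from the Transverse Mercator point scale factor. This sketch uses the spherical approximation k = k0 / sqrt(1 - B^2), with B = cos(lat) * sin(lon - lon0) and k0 = 0.9996, the UTM scale factor on the central meridian; the Eau Claire latitude is approximate and assumed. Under these assumptions, the scale error at this latitude stays at a few centimeters per 100 meters whether a point sits on the central meridian, at Eau Claire, or on the zone 15/16 boundary, which is far smaller than typical pacing error.

```python
import math

K0 = 0.9996  # UTM scale factor on each zone's central meridian

def tm_point_scale(lat_deg: float, lon_deg: float, central_meridian_deg: float) -> float:
    """Approximate Transverse Mercator point scale factor (spherical formula)."""
    phi = math.radians(lat_deg)
    dlam = math.radians(lon_deg - central_meridian_deg)
    b = math.cos(phi) * math.sin(dlam)
    return K0 / math.sqrt(1.0 - b * b)

CM_ZONE_15 = -93.0  # central meridian of UTM zone 15
LAT = 44.81         # approximate latitude of Eau Claire (assumed)

# Central meridian, Eau Claire, and the zone 15/16 boundary.
for lon in (-93.0, -91.50, -90.0):
    k = tm_point_scale(LAT, lon, CM_ZONE_15)
    error_cm = abs(1.0 - k) * 100.0 * 100.0  # error over 100 m, in centimeters
    print(f"lon {lon:7.2f}: k = {k:.5f}, error over 100 m = {error_cm:.1f} cm")
```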

Conclusion

I believe this lab will greatly help in future map creation. Normally, when I have made a map, I have not needed to worry about the user relying on it for navigation; it has usually served as a tool for visual geospatial description. For this type of map, the choice of coordinate system and projection is vital to its accuracy and reliability. Another important aspect was the grid system, which will be a key tool when we try to find the navigation points at the Priory.

Because our 100 meter pace count is measured in meters, I expect the UTM map to be easier to use in the field. However, elevation change is always an issue, since uneven terrain often causes one to over- or under-compensate when pacing.
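Part of the terrain effect is geometric: the map shows horizontal distance, but walking up or down a slope covers a longer distance along the ground. This sketch assumes a uniform slope and the same stride length as on flat pavement; in practice strides usually shorten on slopes, which likely matters more than the geometry. The 10 meter rise (two 5 meter contour intervals) is a hypothetical value.

```python
import math

PACES_PER_100_M = 72  # pace count measured on flat pavement

def slope_distance(horizontal_m: float, rise_m: float) -> float:
    """Distance walked along a uniform slope for a given map (horizontal) distance and rise."""
    return math.hypot(horizontal_m, rise_m)

def paces_on_slope(horizontal_m: float, rise_m: float,
                   paces_per_100m: float = PACES_PER_100_M) -> float:
    """Paces needed if stride length stays the same as on flat ground (an assumption)."""
    return slope_distance(horizontal_m, rise_m) * paces_per_100m / 100.0

print(round(paces_on_slope(100, 0), 1))   # 72.0
print(round(paces_on_slope(100, 10), 1))  # 72.4
```

The geometric correction alone adds less than one pace per 100 meters here, which suggests that changes in stride length on uneven ground are the larger source of pacing error.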

(Figure 3) GCS using latitude/longitude and degrees/minutes/seconds

(Figure 5) 2 foot contour lines. This layer was not used in either map because of its clutter; the DEM depicts similar characteristics without all of the lines (see Figure 7).

(Figure 7) Gray scale DEM. The darkest areas show the lowest elevation, and the lightest areas show the highest elevation.

(Figure 6) 5 meter contour lines and 2 foot contour lines. This display was avoided to reduce clutter on the map.

(Figure 8) Coordinate System: NAD 1983 (2011)

(Figure 9) Coordinate System: NAD 1983 UTM Zone 15N. Projection: Transverse Mercator
